Affiliate marketing may look simple from the outside—but it’s anything but easy.

Many newcomers jump in with a “let’s just try it” mindset. They launch ads hastily, make decisions based on gut feelings, and pause or scale campaigns just because a few numbers went up or down.

Affiliate marketers fail for all kinds of reasons—bad product choices, confusing ad platforms, weak landing pages.

But one of the most common (and costly) reasons?

Acting too soon—before the data has time to speak for itself.

In affiliate marketing, there are many skills you need: writing ad copy, choosing offers, building landing pages, and scaling campaigns.

But if you can’t read and interpret your data correctly, none of those skills will matter. You’ll end up making the wrong moves—and losing money on campaigns that could have worked.

This post doesn’t offer gimmicks. No “get-rich-quick” hacks.

But it will walk you through the fundamentals. Understand them well, and you’ll avoid most of the common mistakes affiliates make when optimizing their campaigns.

Why You Shouldn’t Trust Early Results in Affiliate Ad Campaigns

Imagine flipping a coin five times.

And all five times, it lands on heads.

What would you say?

“Wow, this coin always lands on heads”?

It might sound reasonable—but deep down, you know that’s not true.

In reality, the chance of getting heads is 50%. You just haven’t flipped it enough times. You’re drawing conclusions from a tiny data set—one that doesn’t represent the bigger picture.

Affiliate marketing works the same way.

You launch a campaign and set up two ad creatives.

After one day, Ad A gets 2 conversions. Ad B gets none.

You think: “Looks like A is the winner,” and turn off B.

But if you had waited two or three more days… maybe Ad B would’ve pulled ahead.
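How often can a split like that happen when the two ads are actually identical? Here’s a quick Python sketch to check. It assumes, purely for illustration, that both ads share the same true conversion rate of 2% and each gets 50 clicks on day one; those numbers are hypothetical, not from a real campaign. It computes the exact binomial probability that one ad ends the day with 2 or more conversions while the other has none.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k conversions from n clicks at true rate p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def chance_of_false_winner(clicks: int, rate: float) -> float:
    """Probability that one ad ends with 2+ conversions while the other has 0,
    when both ads share the SAME true conversion rate."""
    p_zero = binom_pmf(0, clicks, rate)
    p_two_plus = 1 - p_zero - binom_pmf(1, clicks, rate)
    # Either A gets 2+ while B gets 0, or the reverse; the cases don't overlap.
    return 2 * p_two_plus * p_zero


# Hypothetical day one: 50 clicks per ad, both truly converting at 2%.
print(f"{chance_of_false_winner(50, 0.02):.1%}")
```

Under those assumptions the answer comes out around 19%. Roughly one launch day in five would hand you a “clear winner” that is pure noise.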

This is something many affiliate marketers don’t realize:

When you’re just starting a campaign and see those first few results, don’t trust them too quickly. It’s just like flipping a coin a few times—early outcomes don’t tell you the whole story.

Why Statistical Significance Matters in Affiliate Testing

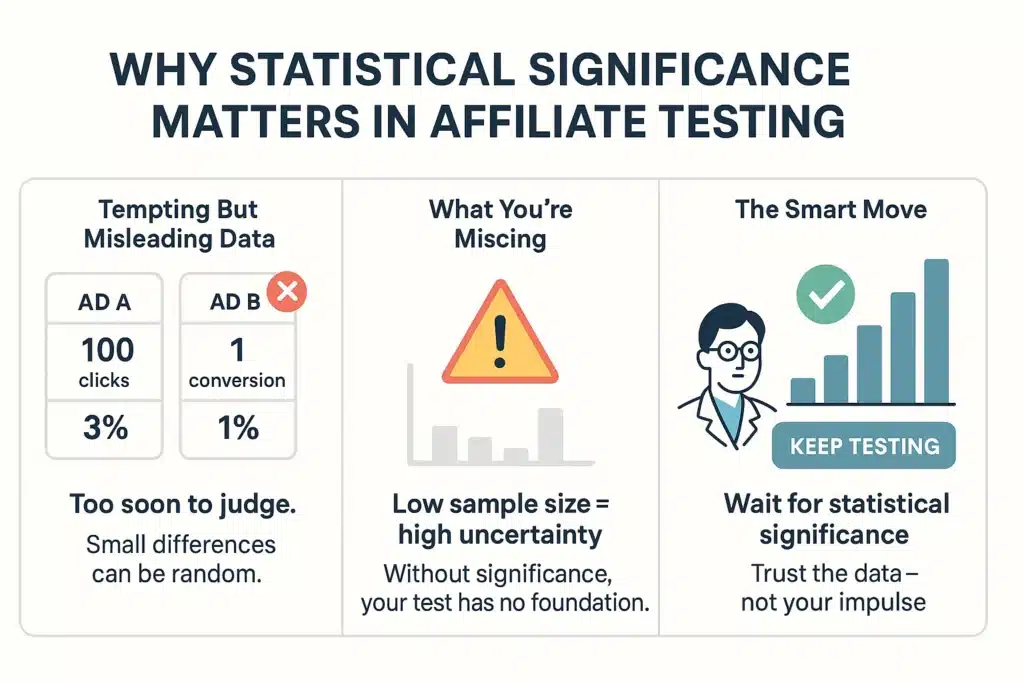

When you’re comparing the performance of two ad creatives, the key question is: Can you trust the result?

Let’s say:

Ad A gets 100 clicks and 3 conversions (3% conversion rate).

Ad B also gets 100 clicks, but only 1 conversion (1% conversion rate).

You’re thinking of turning off Ad B?

Hold on.

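That result isn’t solid enough to say Ad A is truly better than Ad B. With only four conversions between them, a gap this size can easily appear by chance, even if both ads convert equally well.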

What you need is statistical confidence. If your sample size is too small, the difference might just be random noise.
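One standard way to put a number on “random noise” is a two-proportion z-test. The sketch below is a minimal Python version, assuming the classic pooled test with a normal approximation; I’m not claiming this is exactly what any particular calculator runs, and the function name is illustrative.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int):
    """Pooled two-proportion z-test. Returns (z, two_sided_p_value)."""
    rate_a = conv_a / clicks_a
    rate_b = conv_b / clicks_b
    # Pooled rate assumes (null hypothesis) both ads truly convert equally.
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    se = sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if se == 0:
        # No conversions (or only conversions) on both sides: nothing to compare.
        return 0.0, 1.0
    z = (rate_a - rate_b) / se
    # Two-sided p-value from the standard normal tail: P(|Z| >= |z|).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


z, p = two_proportion_z_test(3, 100, 1, 100)
print(f"z = {z:.2f}, p = {p:.2f}, confidence = {1 - p:.0%}")
```

For the Ad A vs. Ad B example above, it gives z ≈ 1.0 and a two-sided p-value of about 0.31, which is roughly 69% confidence. Tools that report one-sided confidence would show about 84%. Either way, it’s well short of 95%.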

Until the results are statistically significant, keep driving traffic.

Wait until the data is trustworthy.

If you don’t, you risk making the same mistake many others have: Killing a potentially winning ad—just because you moved too fast.

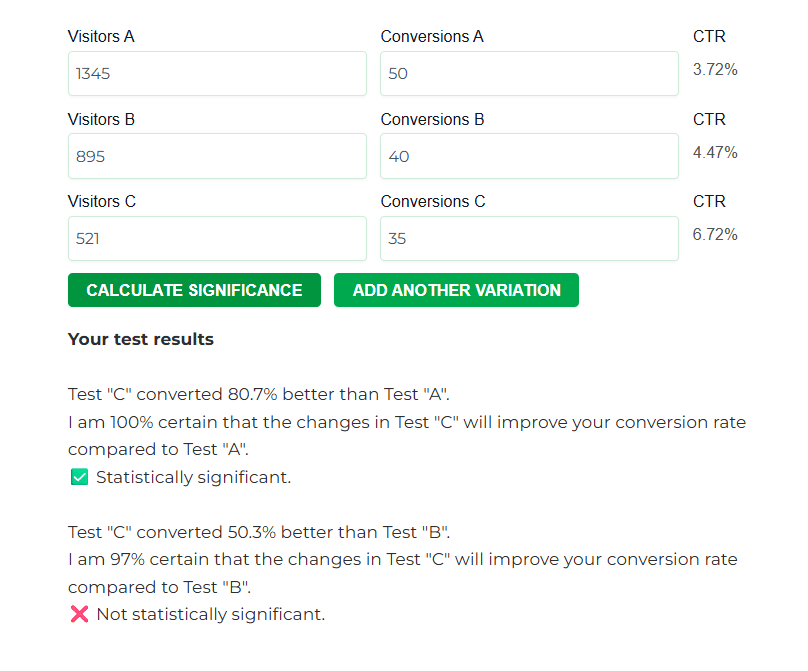

How to Know If Your Test Results Are Statistically Significant

You don’t have to guess.

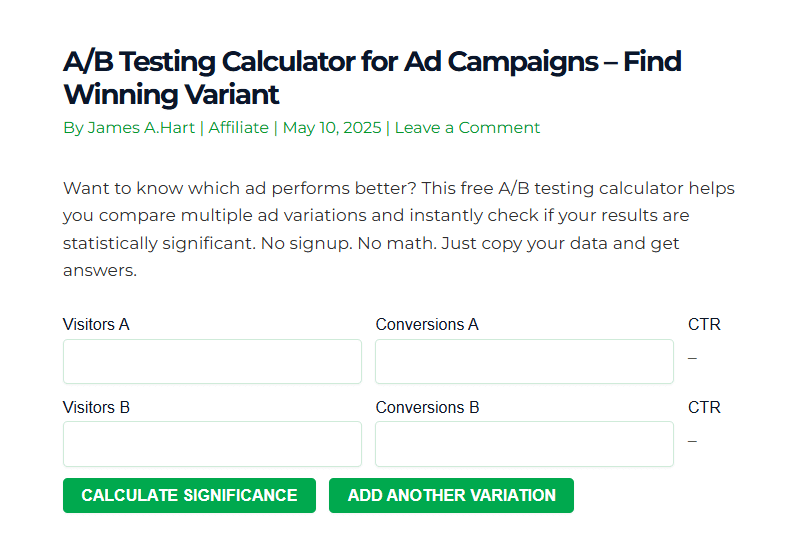

Just use a simple A/B testing calculator I built here:

A/B Testing Calculator – James The Marketer

All you need to do is enter:

- The number of clicks

- The number of conversions for each variation

…and the tool will show you the conversion rate, the performance gap between variants, and the statistical confidence.

If it hits 95% confidence, you can be reasonably sure the difference isn’t just luck.

This helps you avoid shutting down a promising ad just because of… random chance.

You can also use this method to test many other variables in your affiliate campaigns—like landing pages, offers, headlines, images, and more.

Want to test more variations?

Just click “Add another variation”—the tool expands as you go.
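One caveat when you add variations: every extra comparison is another chance for a fluke to cross the 95% line. A simple (and conservative) fix is the Bonferroni correction, which divides the 5% error allowance by the number of comparisons. I don’t know whether the calculator linked above applies any correction, so treat this Python sketch as a separate, hypothetical check. The function names and the sample numbers are illustrative.

```python
from math import erfc, sqrt


def two_sided_p(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    se = sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if se == 0:
        return 1.0  # no variation in outcomes, so no evidence of a difference
    z = (conv_a / clicks_a - conv_b / clicks_b) / se
    return erfc(abs(z) / sqrt(2))


def compare_to_control(control, variants, alpha=0.05):
    """Compare each variant to the control using a Bonferroni-adjusted threshold.

    control is (conversions, clicks); variants maps name -> (conversions, clicks).
    Returns name -> (p_value, significant).
    """
    threshold = alpha / len(variants)  # share the error allowance across tests
    results = {}
    for name, (conv, clicks) in variants.items():
        p = two_sided_p(conv, clicks, *control)
        results[name] = (p, p < threshold)
    return results


# Hypothetical data: a control and three challengers, 1,000 clicks each.
results = compare_to_control(
    (20, 1000),
    {"B": (35, 1000), "C": (28, 1000), "D": (22, 1000)},
)
for name, (p, significant) in results.items():
    print(f"{name}: p = {p:.3f}, significant after correction: {significant}")
```

In this made-up example, variant B would pass a single 95% test on its own (p ≈ 0.04), but not once the threshold is split across three comparisons (0.05 / 3 ≈ 0.017).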

Common Mistakes in A/B Testing for Affiliate Marketing

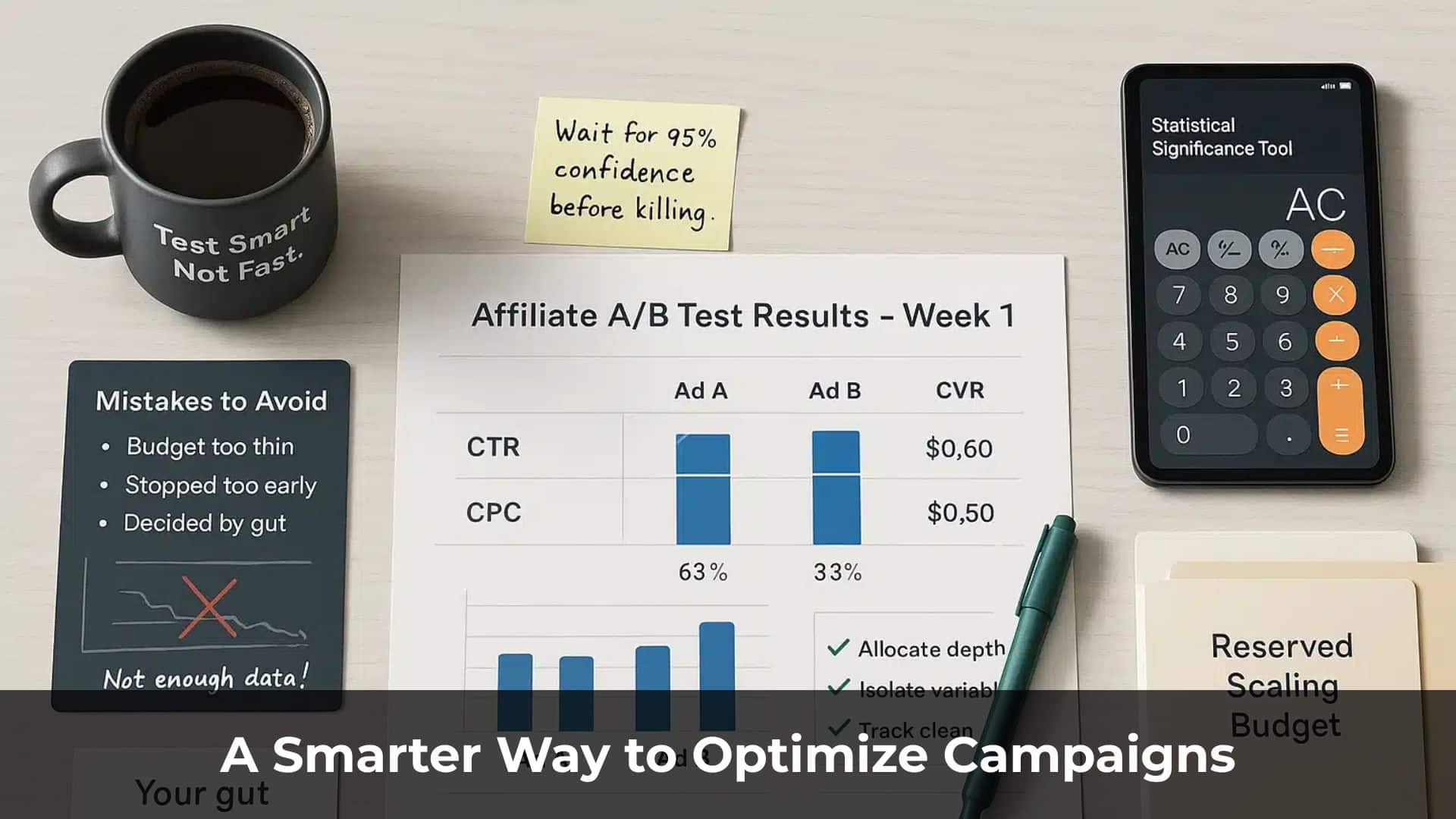

By now, you understand how crucial statistical significance is.

Next, let’s walk through some of the most common A/B testing mistakes affiliates make—so you can avoid them and reduce your chances of failure.

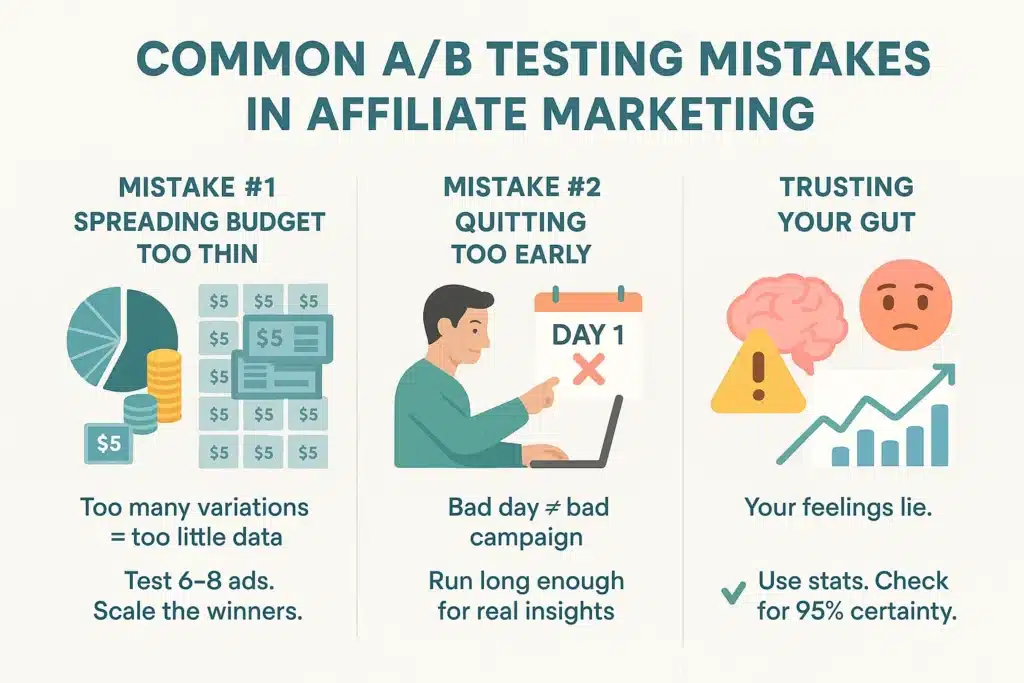

Here are three major mistakes—even experienced marketers fall into these traps.

Mistake #1: Spreading Your Budget Too Thin Across Too Many Variations

Let’s say you have a $200 budget for testing ads.

Sounds decent. But if you split that across 40 different creatives, each ad only gets $5 worth of traffic.

That’s not enough to generate reliable data. A few scattered clicks don’t mean anything.

Too many variations + too little budget = meaningless data

Instead of spreading your budget thin, be selective.

Start by testing 6–8 variations. Identify which ones perform best, then move to the next round of testing with only the winners.
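The arithmetic behind “too many variations + too little budget” is easy to sketch. This Python snippet assumes hypothetical traffic costs of $0.10 per click and a 2% conversion rate (plug in your own), and estimates what each variation gets from a $200 test budget.

```python
def expected_per_variation(budget: float, variations: int, cpc: float, conv_rate: float):
    """Split a test budget evenly; return (spend, clicks, conversions) per variation."""
    spend = budget / variations
    clicks = spend / cpc              # clicks that spend buys at this CPC
    conversions = clicks * conv_rate  # expected (average) conversions
    return spend, clicks, conversions


# Hypothetical traffic: $0.10 per click and a 2% conversion rate.
for n in (40, 8):
    spend, clicks, conv = expected_per_variation(200, n, cpc=0.10, conv_rate=0.02)
    print(f"{n} variations: ${spend:.2f} each, ~{clicks:.0f} clicks, ~{conv:.1f} conversions")
```

With those assumptions, 40 variations get about 50 clicks and 1 expected conversion each, while 8 variations get about 250 clicks and 5 expected conversions each. Pricier clicks or a lower conversion rate would shrink those numbers further.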

Mistake #2: Killing a Campaign Just Because It Lost Money on Day One

Newbies often panic if their affiliate ads don’t convert in the first 1–2 days.

But here’s what they don’t realize:

- Some niches only convert well on weekends

- Certain times of day perform much better than others

If you shut off a campaign on Wednesday because it’s losing money, you’ll never know whether Friday and Saturday might’ve been profitable.

A bad day doesn’t mean a bad campaign.

Look at performance by the week—not the day.
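If your tracker exports daily numbers, rolling them up by week (and by weekday) takes a few lines of Python. The sketch below uses only the standard library; the profit figures are hypothetical, chosen to show a campaign that looks bad midweek but ends the week positive.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, Tuple

WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def profit_by_iso_week(daily_profit: Dict[date, float]) -> Dict[Tuple[int, int], float]:
    """Sum daily profit into (ISO year, ISO week number) buckets."""
    totals = defaultdict(float)
    for day, profit in daily_profit.items():
        iso_year, iso_week, _ = day.isocalendar()
        totals[(iso_year, iso_week)] += profit
    return dict(totals)


def profit_by_weekday(daily_profit: Dict[date, float]) -> Dict[str, float]:
    """Average profit per weekday, to surface weekend or midweek patterns."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for day, profit in daily_profit.items():
        name = WEEKDAYS[day.weekday()]  # date.weekday(): Monday == 0
        sums[name] += profit
        counts[name] += 1
    return {name: sums[name] / counts[name] for name in WEEKDAYS if counts[name]}


# Hypothetical week starting Monday, 1 January 2024: red early, green late.
start = date(2024, 1, 1)
profits = [-12, -8, -5, 3, 18, 25, 14]
daily = {start + timedelta(days=i): float(p) for i, p in enumerate(profits)}

print("Through Wednesday:", sum(profits[:3]))
print("By ISO week:", profit_by_iso_week(daily))
print("By weekday:", profit_by_weekday(daily))
```

Through Wednesday this made-up campaign is down $25, but the full week closes at +$35. The weekday averages are where a weekend pattern would show up once you have several weeks of data.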

Pro tip: Facebook Ads and Google Ads often take time to gather enough data and optimize delivery. This “learning” phase can last several days (or longer).

So don’t pull the plug too early. Let the campaign run long enough to collect valid data—just make sure to set a clear daily budget cap.

Mistake #3: Making Decisions Based on Gut Feeling

You look at your stats and say, “I have a feeling this ad is better.”

Sorry, but gut instinct isn’t a reliable strategy.

Human bias is deceptive—and without enough data, your judgment will often be wrong.

No matter how experienced you are, you can’t feel which variation has a 95% chance of winning.

You need to calculate it.

You need clarity—not guesswork.

Affiliate marketing isn’t just art.

It’s part math.

So when you’re analyzing performance, don’t trust your gut—trust the data.
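Strictly speaking, the 95% confidence a typical A/B calculator reports is not the same thing as a “95% chance of winning.” If you want that second number directly, a Bayesian approach can estimate it. This Python sketch is a different technique from the confidence calculation, and it assumes a uniform Beta(1, 1) prior on each ad’s conversion rate; the function name is illustrative.

```python
import random


def prob_a_beats_b(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int,
                   draws: int = 20_000, seed: int = 7) -> float:
    """Monte Carlo estimate of P(true rate A > true rate B).

    Assumes a uniform Beta(1, 1) prior, so each posterior is
    Beta(1 + conversions, 1 + clicks - conversions).
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + clicks_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + clicks_b - conv_b)
        if rate_a > rate_b:
            wins += 1
    return wins / draws


# The earlier example: Ad A 3/100, Ad B 1/100.
print(f"P(A beats B) = {prob_a_beats_b(3, 100, 1, 100):.0%}")
```

On the earlier 3-vs-1 example (100 clicks each), this lands at roughly 80%: A is more likely the better ad, but nowhere near a 95% chance.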

How to Allocate Your Budget for Effective Campaign Testing

One of the most common questions beginner affiliates ask is:

“With my current budget, how many ad creatives should I test? How many landing pages? How many offers?”

The answer?

There’s no fixed number.

But there are principles—and if you follow them, you’ll avoid spreading your budget too thin or burning through it too fast.

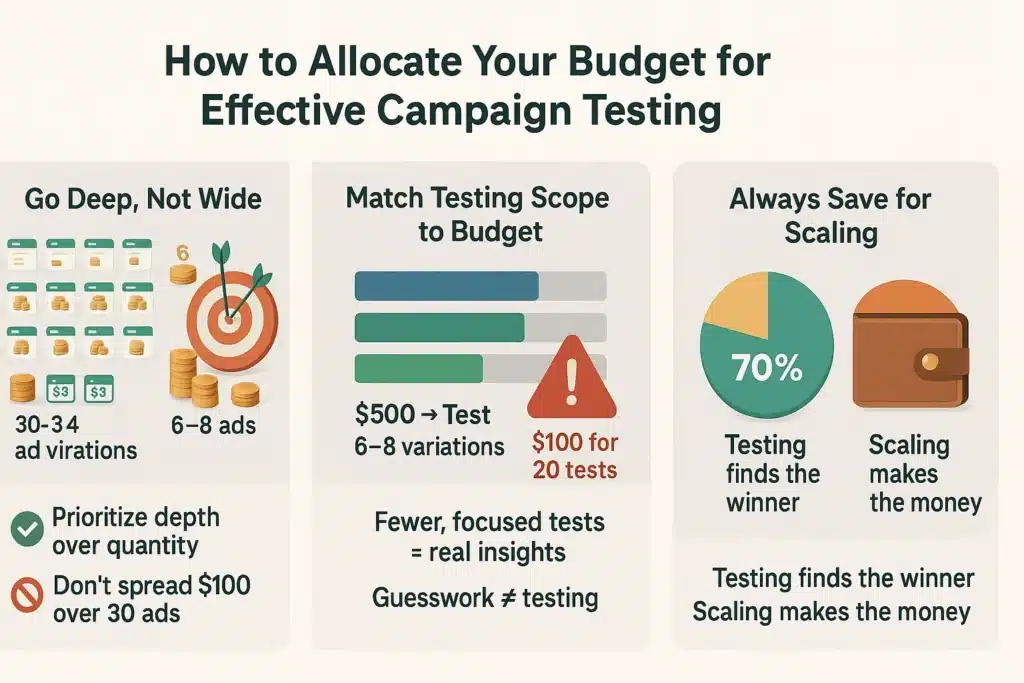

Principle #1: Prioritize Depth Before Testing More Variations

Don’t test 30 ad variations just to feel productive.

Instead, choose the most promising ones and test deep enough to get statistically valid results.

To achieve meaningful insights, you’ll need enough:

- Clicks (usually a few hundred or more per variation, depending on your niche and conversion rate)

- Impressions (especially if you’re running on CPM)

- Conversions (aim for at least 5 per variation)

If your budget isn’t large enough to go deep on each variable, don’t try to test too many things at once. Focus on fewer tests with better data.
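How much is “enough”? A common formula estimates clicks per variation from the conversion rates you expect, the confidence level, and the statistical power (the chance of detecting a real difference). Here’s a Python sketch using the standard normal-approximation formula for two proportions; the 80% power default and the example rates are my assumptions, not figures from this post.

```python
from math import ceil
from statistics import NormalDist


def clicks_needed_per_variation(base_rate: float, target_rate: float,
                                confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate clicks each variation needs for a two-sided two-proportion
    test to detect a move from base_rate to target_rate (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # ~1.96 at 95%
    z_power = NormalDist().inv_cdf(power)                     # ~0.84 at 80%
    variance = base_rate * (1 - base_rate) + target_rate * (1 - target_rate)
    n = (z_alpha + z_power) ** 2 * variance / (target_rate - base_rate) ** 2
    return ceil(n)


print(clicks_needed_per_variation(0.02, 0.03))  # detect 2% -> 3%
print(clicks_needed_per_variation(0.02, 0.04))  # detect 2% -> 4%
```

Going from a 2% to a 3% conversion rate needs roughly 3,800 clicks per variation at 95% confidence and 80% power, while a bigger jump (2% to 4%) needs roughly 1,100. Small improvements take a lot of traffic to confirm.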

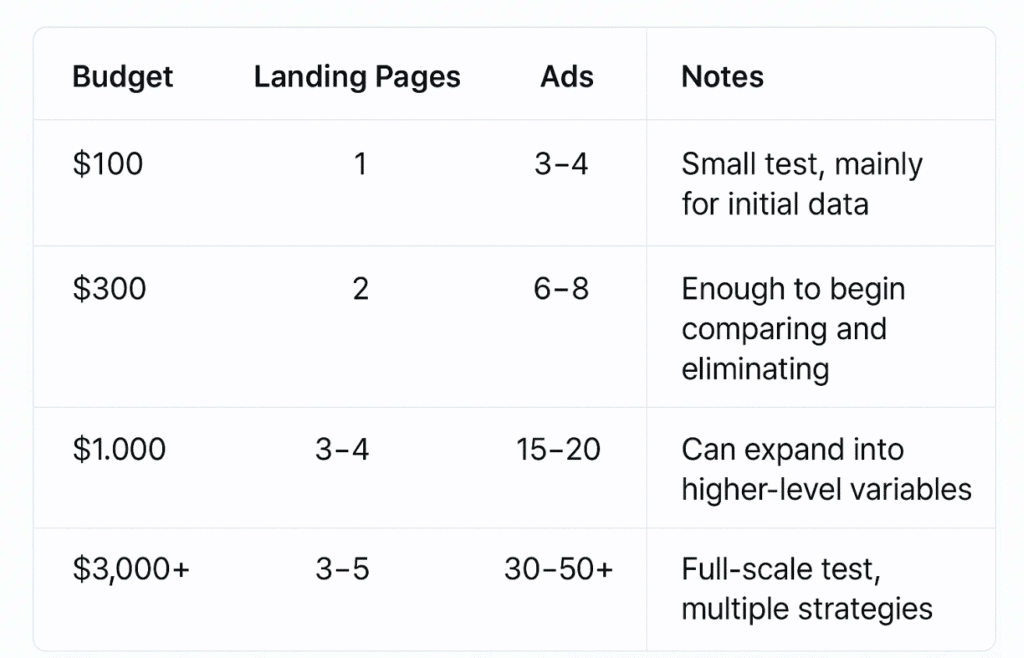

Principle #2: Match the Scope of Testing to Your Budget

Here’s a rough guide to help you visualize how to allocate your budget:

Note: This isn’t a rigid formula—but if you’re using $100 to test 20 different ads, you’re not testing. You’re guessing.

It’s better to run fewer, well-funded tests than dozens of half-baked ones.

Principle #3: Always Reserve Budget for Scaling

Here’s a classic mistake many affiliates make:

They blow their entire budget on testing.

After all that testing, they finally find a winning ad and a high-converting landing page. But now their account balance is $0.

No money left to scale.

No chance to capitalize on what actually works.

Always remember: The point of testing is to discover what works—not to spend everything discovering it.

Think of your budget like a pie:

- Allocate 50–70% for testing

- Save 30–50% for scaling your best-performing assets

If you finish testing and have no capital left to scale, you’ve only done half the job. You paid to find the right answer—but now you have nothing left to act on it.
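To make the pie concrete, here’s a tiny Python helper that splits a total budget using the 50–70% testing range above. The $1,000 figure and the 60% default are just examples.

```python
from typing import Tuple


def split_budget(total: float, testing_share: float = 0.6) -> Tuple[float, float]:
    """Split a budget into (testing, scaling) amounts.

    testing_share is restricted to the 50-70% rule of thumb from this post.
    """
    if not 0.5 <= testing_share <= 0.7:
        raise ValueError("testing_share should be between 0.5 and 0.7")
    testing = total * testing_share
    return testing, total - testing


testing, scaling = split_budget(1000)
print(f"Testing: ${testing:,.0f}  Scaling: ${scaling:,.0f}")
```

With a 60% testing share, $1,000 becomes $600 for testing and $400 held back for scaling.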

The A/B Testing Calculator That Helps You Make Smarter Decisions

Affiliate marketing isn’t just about running ads.

You’re working with data—and you need the right tools to read that data accurately.

Some numbers can’t be guessed or “felt” with intuition.

You need to know, with clarity:

- What’s the current conversion rate?

- How do the ad variations compare?

- Can you trust the result with 95% confidence?

So how do you calculate all that?

There are plenty of tools online—but honestly, I wasn’t satisfied with any of them.

So I built my own: a simple A/B Testing Calculator.

You can use it here: A/B Testing Calculator

I mentioned this tool earlier in the post—but it’s so important that I want to explain it again, especially for newbies who might still be unclear.

This tool helps you:

- Calculate the conversion rate (conversions ÷ visits) for each variation

- Compare different variations and identify which one is performing better

- Check for statistical significance so you know how reliable your data is

- Get a clear verdict: “Are you ready to make a decision—or do you need more data?”

How to use it:

- Enter the number of Visits and Conversions for each variation (A, B, C, etc.)

- Click Calculate

- Review the results—if the confidence level hits 95% or more, you can act with confidence. If not, keep the campaign running and gather more data.

Don’t make big decisions based on instinct.

Let the data guide you.

A Few Final Words…

Affiliate marketing isn’t a game of luck.

And it’s not a place for vague instincts or gut-based guesses.

In this game, you win by observing carefully, analyzing thoroughly, and making decisions at the right moment.

The better you understand your data, the calmer you’ll be when early results roll in.

And the less money you’ll waste chasing false signals—bringing you closer to real, sustainable profits.

If you found this post helpful, feel free to bookmark it, share it, or send it to a teammate who’s also walking the affiliate path.

It might just help them avoid an unnecessary—and costly—mistake.